"Decoding Attention" Chapter 1 - A learning material of Transformer for software engineers

As I promised in the last post, I’ve started building learning material about the Transformer for software engineers who don’t have a machine learning background.

tl;dr - “Decoding Attention”

“Decoding Attention” is the name of the course, and I’ve published Chapter 1.

“Attention” is at the core of most current AI systems, and the architecture that handles Attention very well is called the “Transformer”. Decoding Attention is intended to explain Attention and the Transformer to people who are not ML (machine learning) experts, so that they can start their journey into the AI era.

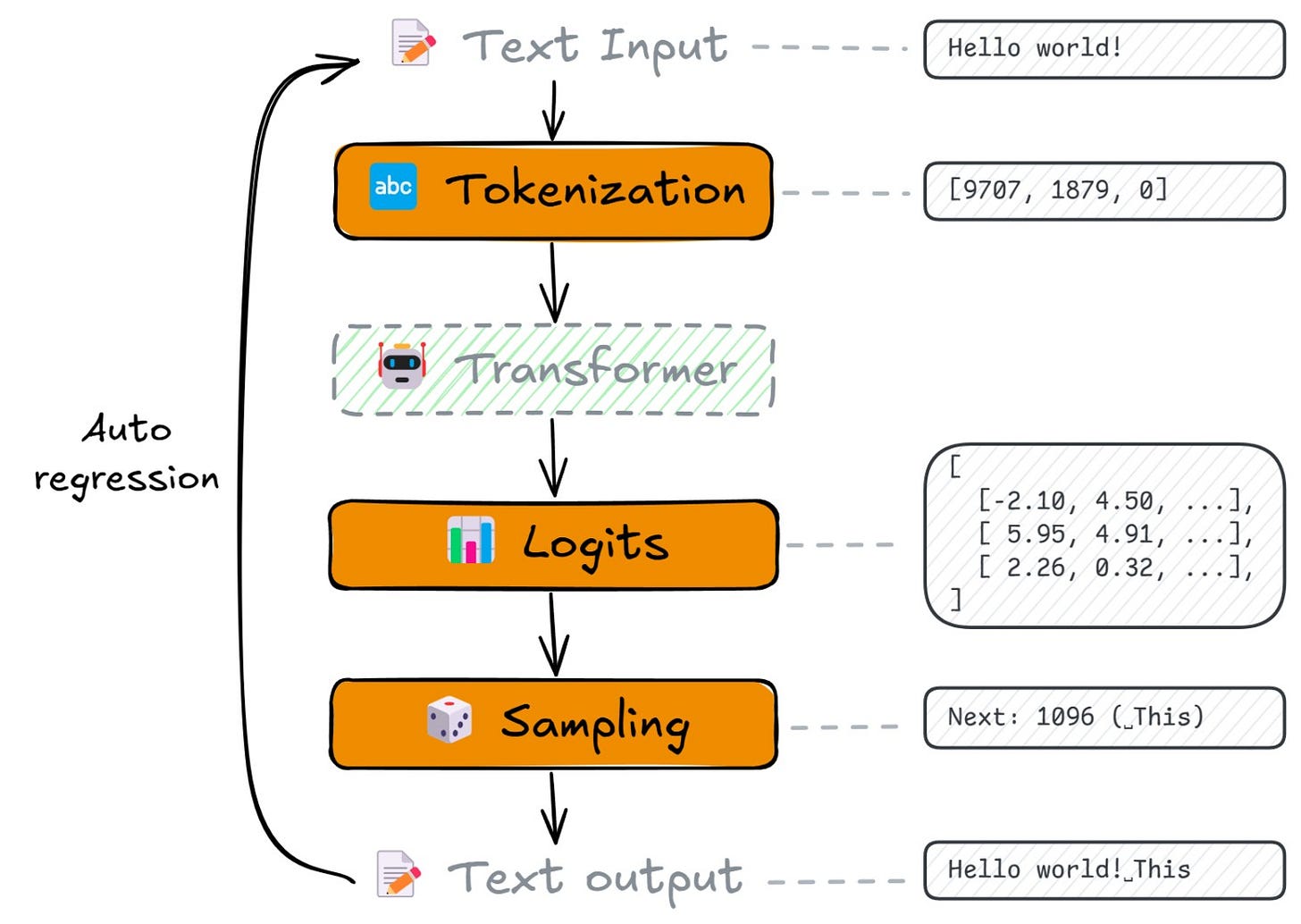

The first chapter of Decoding Attention explains the input and output of a Transformer model, so that you can at least understand the Transformer as a black-box function for now. For a software engineer, understanding a new thing from its inputs and outputs should be the most straightforward approach, I believe:

Live demo: https://decoding-attention.opsbr.dev/chapter1

Note: It’s running on a Raspberry Pi 5, so it might go offline from time to time!
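
To make the black-box view concrete, here is a minimal sketch in Python. It is not the code from Chapter 1 and not a real Transformer: the vocabulary, the function names (`encode`, `toy_model`, `next_token`) and the scoring rule are all made up for illustration. It only assumes the common language-model setup, where the input is a sequence of token IDs and the output is a probability for each possible next token.

```python
import math

# Hypothetical toy vocabulary; a real model has tens of thousands of tokens.
VOCAB = ["<eos>", "hello", "world", "attention", "transformer"]


def encode(text: str) -> list[int]:
    """Map whitespace-separated words to token IDs (unknown words are skipped)."""
    return [VOCAB.index(w) for w in text.lower().split() if w in VOCAB]


def toy_model(token_ids: list[int]) -> list[float]:
    """Stand-in for the black box: token IDs in, next-token probabilities out.

    The scores are invented (this is NOT a real Transformer); only the
    shape of the input and output is the point.
    """
    last = token_ids[-1] if token_ids else 0
    # Fake scores: favor the token that follows the last one in VOCAB.
    favored = (last + 1) % len(VOCAB)
    logits = [2.0 if i == favored else 0.0 for i in range(len(VOCAB))]
    # Softmax turns arbitrary scores into a probability distribution.
    exps = [math.exp(x) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(text: str) -> str:
    """Pick the most likely next token for the given text."""
    probs = toy_model(encode(text))
    return VOCAB[probs.index(max(probs))]
```

Calling `next_token("hello")` encodes the text, asks the stand-in model for a distribution over `VOCAB`, and returns the highest-scoring token. A real model replaces the fake scoring rule with the Transformer itself, but the caller sees the same kind of function.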

Why do I need to understand Transformer? I’m a software engineer!

To answer this question, I’d like to introduce Andrej Karpathy’s “Software 3.0” idea.

Software 3.0

We, as software engineers, have been working on Software 1.0 for decades, and software really was “eating the world”. However, about 10 years ago, probably because of deep learning, neural networks started eating the world in areas like visual recognition and machine translation. That is when Software 2.0 began. Over the last few years, LLMs have become much more popular, and natural language is the hottest programming language today, i.e. Software 3.0.

However, this doesn’t mean that all of Software 1.0’s territory has been taken by Software 2.0 or 3.0. Today’s best applications need all three, so you should know all of them to build a good application. Thankfully, you already know Software 1.0, so you can focus on learning Software 2.0 and 3.0, and then you’ll be among the top software engineering talent today.

Transformer

Both Software 2.0 and 3.0 are backed by neural networks (which we’ll learn about later in Decoding Attention), especially the Transformer architecture. The people who build Software 2.0 today are basically machine learning experts, and that hasn’t changed since before Software 2.0 began, because it requires careful design and execution of ML training. However, these days LLM training is being democratized thanks to the “post” training phases. Without fully training an LLM from scratch, you can fine-tune existing models (and that was the breakthrough of ChatGPT). I’m not saying it’s super easy for everyone yet, but it is getting easier, e.g. Text-to-LoRA opens this up by letting you just type natural language. That’s why I’m very interested in post-training today.

Software 3.0 is already open to everyone, even people who don’t have any coding experience, e.g. I really love this video, where 9-13 year old kids can vibe-code. However, it’s still rough, and just as you understand the OS, CPU, etc. as a software (1.0) engineer, understanding the foundational system is quite useful for building sophisticated applications. Andrej called it the “LLM OS”, which I love, too.

So, going back to the original question: “Why do I need to learn Transformer?” My answer is that it’s the core of Software 2.0 and 3.0.

At least for me, someone who didn’t know the Transformer at all two months ago, this understanding gives a clear picture of the LLM OS, and it’s the basis for understanding the Software 2.0 stack (I’m learning post-training now). Thus, I’d love to share the same experience with you.

What’s next?

I’ll keep publishing the remaining chapters. The next chapter goes one layer deeper: “Embedding”. Stay tuned!